#25 Episode - Three Shifts in AI

A Deep Dive Into Artificial Intelligence, Fund Law & Life in the Age of AI

Key Takeaways: AI seems to be finally working; ChatGPT is the fastest growing app ever; AI can test your knowledge, but is it a reliable agent? Does AI have any insights or wisdom?

This week we explore what’s happening in tech and law as it relates to venture funds.

The age of artificial intelligence (AI) is officially here and AI hype is back.

What’s different this time around?

Three Major Shifts in AI

With renewed interest swelling in AI, we are seeing three major shifts: Venture funds are investing billions into AI startups, AI technology is finally working, and ethical and legal concerns are becoming increasingly important.

The first major shift is the hype subsidy that AI startups are receiving thanks to VCs and big tech.1 Gartner expects global spending on AI and software automation systems in 2023 to reach $730 billion, up from $640 billion in 2022.

The second major shift is that AI tech actually seems to work, not just in narrow use cases but on a wide range of general tasks with increased depth and accuracy.

The third major shift is the increasing emphasis on the ethical and legal considerations surrounding AI, including job displacement and AI safety. With algorithms making more critical decisions that affect our daily lives, issues around transparency, accountability, privacy, and alignment with our values become even more important.

So what’s going on that’s causing people to pay close attention to AI once again?

1. Hype Subsidy 🔥🔥🚀

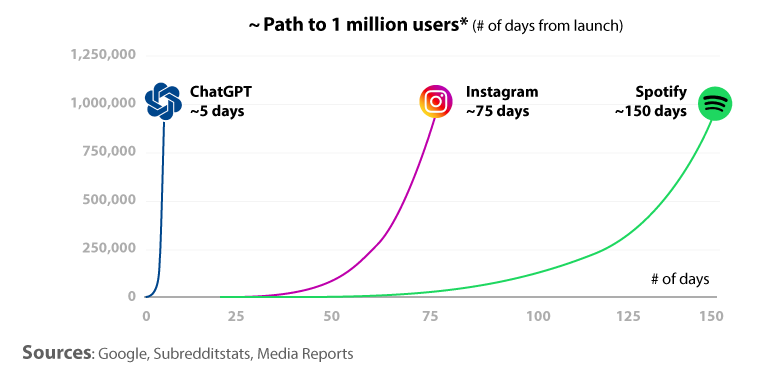

In November 2022, OpenAI released ChatGPT for free.2 It broke records, then buckled under surges in demand. ChatGPT reached 1 million users in 5 days.

In December 2022, a scholarly duo had GPT-3 take the national Bar Exam—the U.S. test required to become a lawyer.3 Despite GPT ultimately failing, it still achieved 71% and 88% accuracy on Evidence and Torts. The authors confidently predicted that AI “will pass the [Bar Exam] in the near future.” Separately, GPT-3 was able to pass a final exam for Wharton’s MBA program, earning a ‘B’ grade.4

By January 2023, ChatGPT reached 100M+ users in 40 days, smashing records as the fastest growing consumer app in history. It now has 13M+ daily active users.5

In January 2023, DoNotPay built on GPT-3 and marketed the result as an “AI Robot Lawyer”. The app runs on your smartphone and AirPods, listening in during live court proceedings and generating responses on the fly. It was scheduled to make a real-life court appearance later this month; however, DoNotPay’s founder and CEO, Josh Browder, shut it down after he received several State Bar complaints and potentially faced jail time for the “unauthorized practice of law”.

A few days ago, Google announced Bard, a new AI chatbot that looks to rival OpenAI’s ChatGPT. Facing mounting pressure, Google released an ad showing it off before fully vetting the content. It did not go well.6

The stakes have never been higher for VCs or big tech companies.

Which is why Microsoft inked a partnership with OpenAI by committing $13+ billion in cash and cloud credits. Microsoft claims it is offering an “even faster, more accurate and more capable” version of GPT called Prometheus.7 It is going on the offensive against Google by making big, splashy news releases. For example, Bing is being reintroduced as “your copilot for the web.”

This AI hype sounds intense, but is it real?

Funding hype does not equal investment value. Anyone remember Clubhouse? IBM Watson? In 2011, Watson crushed its human competition on Jeopardy, only to crush the hopes and budgets of its clients when it later failed commercially:

“The failure of [IBM] Watson was staggering for the field—MD Anderson, the cancer center at the University of Texas, spent $62M dollars implementing the system in critical oncology workflows—before mothballing it less than a year later.”

Tech writer Evan Armstrong sums it up well:

AI is probably the most exciting tech paradigm since the personal computer. But technological innovation does not equal investment opportunities. New tech allows for new opportunities, but it doesn't mean returns will be distributed equally. I’m not convinced that just being a company that sells AI will deliver judicious year-over-year returns.

Let’s review the tech to see how well it works.

2. ChatGPT: An AI Bot That Works, But Is It Intelligent?

ChatGPT is a generative AI platform and large language model that uses natural language processing to generate automated text conversations.

But what does that mean?

Let’s just ask ChatGPT:

"1. Generate text: I am trained on a large corpus of data from the Internet and can generate unique text based on the input I receive.

2. Use Q&A Format: I can answer questions and provide information on a wide range of topics.

3. Understand context: I have been trained on conversational data, which allows me to understand the context and nuances of human communication.

4. Generate natural responses: By understanding human communication patterns, I can generate responses that are more natural and engaging to you.

5. Remember short-term interactions: I also have the ability to store previous information in the same session and use that information to inform future responses, making conversations with me more contextually relevant.”

Okay, but what is interacting with ChatGPT like? Let me give you an analogy.

As the biographies tell the story, Albert Einstein didn’t speak any words until he was 3 years old. He was 5 before he could speak in full sentences. And it wasn’t until age 9 that he was able to speak conversationally.

Late talkers like Einstein can exhibit a wide range of imagination, easily accessible memory, and a deep domain of knowledge.

ChatGPT is similar: It’s like spending time with a young Einstein.

But what’s more important—knowledge or imagination?

As Einstein once said:

“Imagination is more important than knowledge.”8

Imagination is what allows us to innovate, evolve and create things that were previously thought to be impossible.

ChatGPT knows a lot. It’s like a child with a wide range of knowledge and the ability to process information at speeds and scale we can’t imagine. But does it have the ability to think for itself or imagine something new?

This reminds me of that scene in Good Will Hunting where Will Hunting (Matt Damon) has a deep conversation on a bench with his therapist, Sean Maguire (Robin Williams).

Will, a brilliant 20-something orphan, has an encyclopedic recall of knowledge, but his past is preventing him from living a good life. In a moment of vulnerability, Sean (the therapist) opens up to Will about his own life—sharing the pain of losing his best friend to war and then his spouse to cancer. Through Sean’s story, Will begins to understand the importance of connecting knowledge with wisdom and the value of letting another person into his life. By understanding how love, not knowledge, can be used to help himself and others, Will can lead a life worth living.

“You may know so much, but you have never lived.”

The bench scene comes after an earlier scene in which Will gets into a verbal altercation with a Harvard student in a bar. Will says to him, “Were you gonna plagiarize the whole thing for us? Do you have any thoughts of your own on this matter?”

The callback to the bar scene is what makes the bench scene pop:

The brilliance of [the bench] scene is that Sean throws Will’s words back at him, the words Will used earlier to put the arrogant Harvard student in his place, but on a deeper level… Sean (the therapist) is now asking Will, “Are you going to plagiarize a bunch of books, or do you have any original experiences, an original life, of your own?”9

ChatGPT is like Will Hunting. It may know a lot of information—more than we’ll ever dream—but does it have insight, earned wisdom or any lived experiences? If it can’t learn through lived experiences or reflect on its past, how will it gain any real wisdom or insight? AI systems can be trained to perform a wide range of tasks, from simple tasks like recognizing patterns in data to complex tasks like driving a car or playing a game. But if it can’t think for itself or generate new insights, how useful is it?

How Is This All Related to Fund Law?

To tie this back, we should want to know if ChatGPT has any original ideas or insights, or if it is just copying old ideas and information from books. Because if it’s just plagiarizing the law in its own words, maybe it can’t do everything lawyers do.

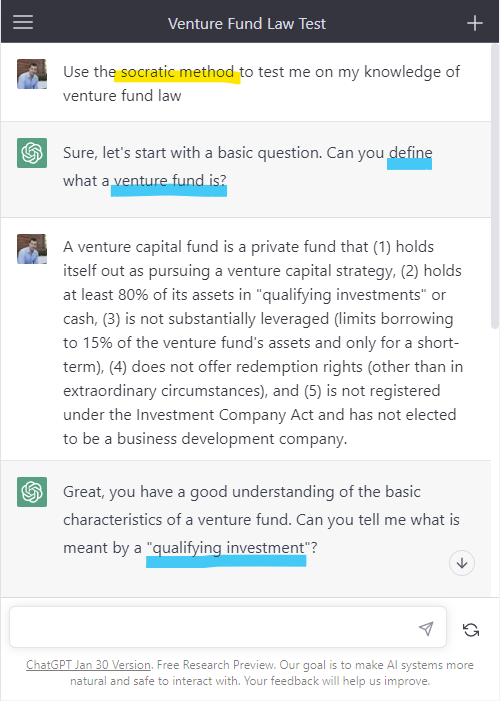

The Socratic Method, which involves asking probing questions and gaining insights through conversation, is a good way to test ChatGPT’s understanding of the law.

Me: Use the Socratic method to test me on my knowledge of venture fund law10

🤖ChatGPT: Sure, let’s start with a basic question. Can you define what a venture fund is?

ChatGPT seems to understand basic venture fund law. But what if it’s tricked?

🤖ChatGPT: Can you tell me what is meant by a “qualifying investment”?

Me: It has to do with accredited investors putting money in… [which is false].

Whoa! Not only does it know my answer is wrong, it distills the definition of venture capital fund to determine what qualifying investments are: Startup investments.11

What if I gave the AI chatbot something more challenging? For example, a fund manager recently asked me the following question:

“I'm launching a Fund 2 with parallel funds, but we don’t have enough QPs to separate all of our investors, so we will launch a 506(b) & 506(c) both with 3(c)(1) exemptions & the (c) being under $10M. Would this give me 349 slots?”

Can ChatGPT analyze this question?

This is wild!! Not only did ChatGPT give me the correct answer (“this structure is not legally possible”), it also cites the law. Now, I’m impressed.

But did ChatGPT actually provide any good legal analysis? Or is it all just B.S.?

ChatGPT acknowledges the fund manager (GP) is asking about maximizing the number of investors available for “Fund 2”, which is an important first step:

🤖 “The question you are asking is whether this structure would legally give you the ability to have 349 slots for beneficial owners.”

But ChatGPT provides irrelevant facts and cites to bad law—for example, there is no limitation for raising funds under Rule 506. It also claims you’re “not allowed to combine multiple exempt offerings to increase the number of investors.” While true, the applicable rules are in a different section. Had GPT known it was reading from the wrong section, it might have noticed an important caveat.

Our focus should be on the Investment Company Act (ICA), not the Securities Act:

There are three relevant limitations under the ICA, including two in Section 3(c)(1):

In broad strokes, the Investment Company Act states that a §3(c)(1) fund can legally have up to either 100 or 250 “beneficial owners”, depending on the amount of capital raised ($10 million or less) and if it is a venture fund.12 For a second fund, the manager must believe that all LPs in that §3(c)(7) fund are “qualified purchasers”. This means that the second fund can add a couple of thousand LPs, while the first fund can remain separate but has the 100 or 250 LP limitations. You cannot combine two §3(c)(1) funds without counting all LPs.
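The headcount rule in that paragraph can be sketched in a few lines of Python. This is a simplified reading of the paragraph above and nothing more: the `Fund` class, the constant names, and the two example funds are hypothetical, and the sketch does not say which limit applies once two funds are counted together.

```python
from dataclasses import dataclass

# Figures taken from the paragraph above; names are illustrative assumptions.
STANDARD_OWNER_LIMIT = 100          # ordinary Section 3(c)(1) fund
VENTURE_OWNER_LIMIT = 250           # small venture fund under Section 3(c)(1)
VENTURE_SIZE_CAP_USD = 10_000_000   # "$10 million or less"


@dataclass
class Fund:
    name: str
    size_usd: int
    is_venture_fund: bool
    beneficial_owners: int


def owner_limit(fund: Fund) -> int:
    """Return the beneficial-owner cap for a single 3(c)(1) fund."""
    if fund.is_venture_fund and fund.size_usd <= VENTURE_SIZE_CAP_USD:
        return VENTURE_OWNER_LIMIT
    return STANDARD_OWNER_LIMIT


def combined_owner_count(funds: list[Fund]) -> int:
    """Count every LP across parallel 3(c)(1) funds, since they cannot be
    combined without counting all LPs."""
    return sum(f.beneficial_owners for f in funds)


# Hypothetical parallel funds, loosely modeled on the manager's question.
fund_a = Fund("Fund 2-A", size_usd=50_000_000, is_venture_fund=True, beneficial_owners=80)
fund_b = Fund("Fund 2-B", size_usd=8_000_000, is_venture_fund=True, beneficial_owners=120)

for fund in (fund_a, fund_b):
    print(fund.name, "limit:", owner_limit(fund))
print("LPs counted together:", combined_owner_count([fund_a, fund_b]))
```

The point of the sketch is that the per-fund caps do not simply add up: the two funds' LPs have to be looked at as one pool before anyone counts "slots".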

In other words, a better answer from ChatGPT would put aside the Rule 506 and the Securities Act issues and instead focus on the Investment Company Act. Rule 506 has no relevance to this discussion because a beneficial owner is anyone who holds an interest in a fund, not just accredited investors.13

An example of this in action is Weekend Fund III:

So, we have established that ChatGPT is pretty good at answering basic questions but in complex situations it gives BS answers. What else do we need to worry about?

3. Jobs Market and Alignment

In a recent interview with Bill Gates, Alex Konrad of Forbes asked Bill one of the most important questions on experts’ minds about the future of AI technology:

What makes you so confident that they [OpenAI and others] are building this AI responsibly, and that people should trust them to be good stewards of this technology? Especially as we move closer to an AGI [Artificial General Intelligence].14

Bill provided his two main concerns:

In the short-term, “the issue with AI is a productivity issue.” The job market.

The long-term issue is control and safety in AI. The alignment problem.

The Jobs Market

Twenty or so years from now, we may look back and wonder why we made things so difficult for ourselves. Household chores such as cooking, cleaning, and laundry, and even more cognitively demanding tasks like managing finances and driving, will be handled by AI robots. In the far future, these tasks will be fully automated for us.

Will you take pleasure in doing your laundry or cleaning the house in your free time? You might. Anthony Bourdain famously said he enjoyed doing his own laundry because it cleansed his soul.15

But the vast majority of us don’t feel the same way.

Will new opportunities be created to support displaced workers who are doing these tasks now, or will those displaced be left without a means of supporting themselves?

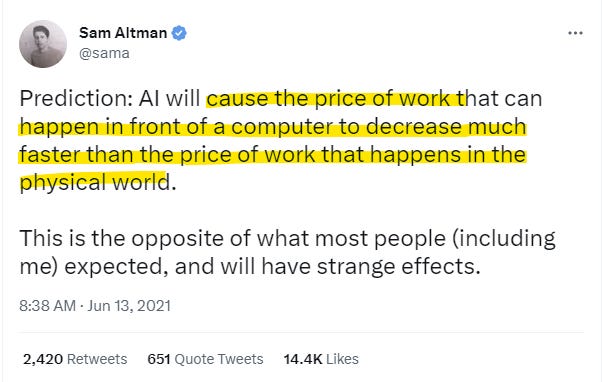

That’s the wrong question. AI and automation are not coming for the lowest wage earners in our society. They’re coming straight for those who least suspect it:

In other words, AI is coming first to displace knowledge workers, not physical labor.

Who fits in that category? It’s captured in this meme:

Now, I’m not worried about an AI taking over my job for all the reasons I laid out, but AI will definitely have a substantial and lasting impact on the jobs market for lawyers and the legal industry because AI generates answers people want. Admittedly, AI often writes in a much more understandable way (even if it’s not always correct).

“The world rewards the people who are best at communicating ideas, not the people with the best ideas.” —David Perell.

If AI can deliver 80% or more of the information a person needs to have their legal issues resolved for a fraction of the cost of hiring a lawyer, it will have huge impacts on the legal job market. A big law partner at a prestigious law firm charges $1,000+ an hour for basic legal advice. OpenAI charges $0.02 per 1,000 tokens, or approximately 750 words. At that rate, a 7,500-word memo (about 10,000 tokens) costs roughly 20 cents.16 Even if AI is producing lower quality content, the value it provides is exponential by comparison.
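The arithmetic behind that comparison is easy to check. Here is a minimal sketch using only the figures quoted above ($0.02 per 1,000 tokens and roughly 750 words per 1,000 tokens); the function name and defaults are my own, and real pricing and token counts will vary by model and text.

```python
def memo_cost_usd(words: int,
                  usd_per_1k_tokens: float = 0.02,
                  words_per_1k_tokens: int = 750) -> float:
    """Estimate the API cost of generating a memo of the given length."""
    tokens = words / words_per_1k_tokens * 1000   # words -> approximate tokens
    return tokens / 1000 * usd_per_1k_tokens      # tokens -> dollars


cost = memo_cost_usd(7_500)
print(f"7,500-word memo: about ${cost:.2f}")
```

With these inputs, 7,500 words is about 10,000 tokens, so the estimate comes out to about twenty cents, which is a tiny fraction of a single billable hour at $1,000.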

“Price is what you pay, value is what you get.” —Warren Buffett

There are lawyers and knowledge workers who do not understand this and yet insist on tying their value to a value-neutral billable hour. Technology and time will not be kind to them.

AI Safety / Alignment Problem

Do we really need human operators? What if we used AI-first products instead?

Although autopilot AI systems have been installed in aircraft since the 1980s, would passengers feel comfortable with a captain at the helm who lacks flying experience with autopilot engaged?

But is that the right analogy for law? Are lawyers like pilots or doctors? People’s lives are not at stake in a legal transaction or in a civil lawsuit, although they may be in other situations (such as criminal defense).

As people grapple with the question of trust and safety of ChatGPT, it is important to consider the implications of AI in terms of fairness, accuracy, and reliability. For instance, if AI is employed in the legal sector, can it be trusted to offer reliable advice without the involvement of lawyers? Or should it be used only as a supplement to the work of attorneys? If AI makes decisions with legal consequences, who would be responsible for any errors?

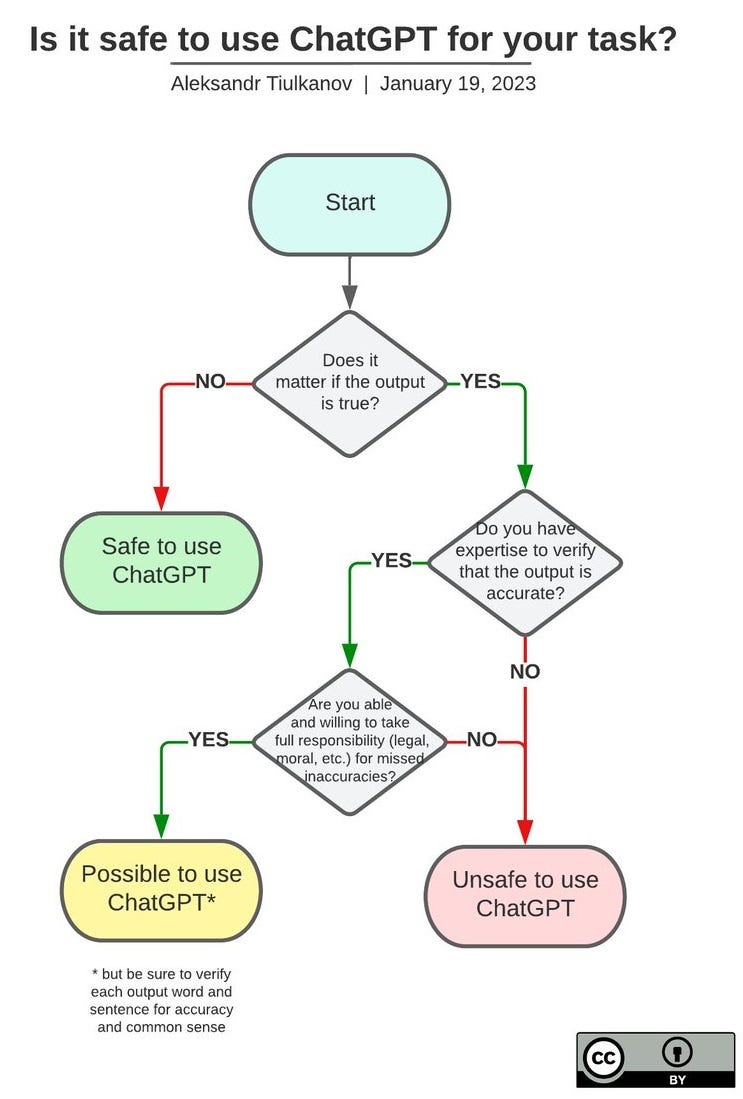

To help answer some of these questions, I found this workflow helpful:

Many industry insiders are concerned about aligning AI with our values.

Two recent stories in my social media feed caught my attention:

17% of undergrads at Stanford used ChatGPT for their December 2022 take-home exams and essays (but only 5% put the AI’s output directly into their exam; the rest used it for brainstorming or outlining their ideas);17

49% of experts polled in a survey predict that AI will “destroy the world”—asked to pick a timeline, the median answer was by 2043, 20 years from now.18

Not everyone agrees,19 but by blindly adopting AI, we risk giving up our collective insights, wisdom and lived experiences that prevent us from being mindless robots.

This wouldn’t be the first time a society let in a shiny new toy only to regret it later. And it certainly won’t be the last. But let’s not repeat the same mistakes others have made throughout lore and history.

Frank Herbert wrote the following words in 1965, a time when AI was just taking off:

“Once, men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.” —Dune

These words have never been more relevant. We should take them to heart.

Subscribing to the Law of VC newsletter is free and simple. 🙌

If you've already subscribed, thank you so much—I appreciate it! 🙏

As always, if you'd like to drop me a note, you can email me at chris@harveyesq.com, reach me at my law firm’s website or find me on Twitter at @chrisharveyesq.

Thanks,

Chris Harvey

Sarah Tavel, The Hype Subsidy – Why Early Hype is Dangerous in Consumer Social, Medium (Jul. 27, 2021).

In 2020, OpenAI launched GPT-3: “Generative Pre-trained Transformer”. GPT-3 can draft articles, jokes, clauses, and even legal contracts. ChatGPT is a slightly different version and interface of GPT-3. OpenAI is a private company institutionally backed by Microsoft. It was co-founded by Sam Altman, its current CEO (formerly of Y Combinator), and Elon Musk, plus several other prominent tech luminaries.

Daniel Katz, Michael Bommarito, GPT Takes the Bar Exam, SSRN (Dec. 31, 2022).

See Kalhan Rosenblatt, ChatGPT passes MBA exam given by a Wharton professor, NBC News, (Jan. 23, 2023). To be clear, however, ChatGPT did not get officially graded—the Wharton professor said it “would have received a B to B-”. Melanie Mitchell, Did ChatGPT Really Pass Graduate-Level Exams?, AI: A Guide for Thinking Humans (Feb. 9, 2023).

By comparison, TikTok took about 9 months to reach 100M users, Instagram 2-1/2 years. See Krystal Hu, ChatGPT sets record for fastest-growing user base, Reuters (Feb. 2, 2023).

The alleged error in question was whether the James Webb Space Telescope did in fact take “the very first pictures of a planet outside of our own solar system.” The question hinges on a space technicality. But even if Google got the answer right, it still has to contend with the pressure of not having produced a rival product equal to or more powerful than OpenAI’s ChatGPT. Tanay Jaipuria, Big Tech and Generative AI, Tanay’s Newsletter (Feb. 6, 2023).

In the 1980s, Microsoft rose to prominence by licensing its operating system software to IBM instead of selling the underlying technology to IBM. This strategy proved to be effective in surpassing IBM and it became known as the “Promethean act of theft” due to Bill Gates’ brilliant strategy behind it. See Geoffrey Moore, Crossing the Chasm, Collins Business Essentials (2006). Ironically, OpenAI may now have an opportunity to return the favor to Microsoft. See Michael J. Miller, The Rise of DOS: How Microsoft Got the IBM PC OS Contract, PC Magazine (Aug. 12, 2021).

Quote Investigator, Imagination Is More Important Than Knowledge (2023).

Byron Gibson, YouTube comments (Feb 2022). The real irony of the bench scene in Good Will Hunting is that then 46-year-old Robin Williams is acting his words out from the screenplay written by then 27-year-old Matt Damon.

A ‘prompt’ is a text input from a user to ChatGPT. My examples are all standard, zero-shot prompts, but there are tips and tricks for getting better answers (even ‘jailbreaking’ the AI to open up Pandora’s box). For example, one of the best tricks is prompting the model with “Let's think step by step,” which results in measured gains for math problems from 17.7% to 78.7%. Here are some other prompt engineering tips to improve your ChatGPT use (all credit goes to Jessica Shieh, @JessicaShieh on Twitter):

Give clearer instructions with format, outcome, length & style—e.g., “Summarize 5 key points in the style of Hemingway” (use ### or """ to separate the instruction and context)

Break down complex tasks

Explain before answering (with good examples and bad examples)

Ask for justifications of many possible answers, and then synthesize

Generate many outputs, and then use the model to pick the best one

Fine-tune custom models to maximize performance
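To make the first tip and the “step by step” trick concrete, here is a small sketch of how such a prompt could be assembled as plain text before pasting it into ChatGPT. The `build_prompt` helper and the sample context are hypothetical; the sketch only builds a string and does not call any API.

```python
def build_prompt(instruction: str, context: str, step_by_step: bool = False) -> str:
    """Join an instruction and its context, separated by ### as suggested above."""
    parts = [instruction.strip(), "###", context.strip()]
    if step_by_step:
        # The zero-shot reasoning cue mentioned in this note.
        parts.append("Let's think step by step.")
    return "\n".join(parts)


prompt = build_prompt(
    instruction="Summarize 5 key points in the style of Hemingway.",
    context="A venture fund raises capital from limited partners and invests in startups.",
    step_by_step=True,
)
print(prompt)
```

Keeping the instruction, the separator, and the context as distinct pieces makes it easy to swap in a new style or length without rewriting the whole prompt.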

Technically, “qualifying investments” must be “equity securities” issued by a portfolio company (convertible notes qualify) that have been acquired directly by the fund—in other words, the fund is not a private equity fund and it’s not investing through secondary sales.

See Law of VC #11 for technical fund terms such as “Beneficial Owners”; see also Law of VC #24 for definitions on technical terms such as “Fund Size” and “Capital Commitment”.

A qualified purchaser (QP) is an individual or entity that meets specified financial criteria. To be considered a qualified purchaser, an individual must own a minimum of $5 million in investments, while an entity must own at least $25 million in private capital, on its own account or on behalf of other QPs. This designation allows these individuals and entities to invest in a “qualified purchaser fund”, that is, a Section 3(c)(7) fund. The fund manager can receive up to 1,999 qualified purchasers before it needs to go public.

Alex Konrad, Exclusive: Bill Gates On Advising OpenAI, Microsoft And Why AI Is ‘The Hottest Topic Of 2023’, Forbes (Feb. 6, 2023).

Amber Raiken, Resurfaced clip of Anthony Bourdain sparks debate about treatment of ‘maids’ in Singapore, Independent (Sept. 27, 2022).

Dave Friedman tweet (@friedmandave on Twitter) (Aug. 27, 2022).

Mark Allen Cu and Sebastian Hochman, Scores of Stanford students used ChatGPT on final exams, survey suggests, Stanford Daily (Jan. 22, 2023).

See Victor Tangermann, Google And Oxford Scientists Publish Paper Claiming AI Will "Likely" Annihilate Humankind, The Byte (Sept. 14, 2022); see also Sun Reporter, Nearly half of tech boffins believe humans will be ‘destroyed’ by artificial intelligence (Jan. 2, 2023).

Marc Andreessen (@pmarca on Twitter), “‘AI regulation’ = ‘AI ethics’ = ‘AI safety’ = ‘AI censorship’. They're the same thing.” (Dec. 22, 2022).
